How to Get Started with Iceberg Tables in Snowflake

At Greybeam, we're constantly exploring ways to optimize Snowflake, and I'm so thrilled that our journey led us to Iceberg and DuckDB. In this post, we'll cover the basics on how to get started with Iceberg tables on Snowflake using Amazon S3. We'll save a deeper dive including setting up different catalogs and hooking up DuckDB for a later post.

What does Iceberg unlock?

Let me provide a brief refresher on Iceberg. Iceberg is a table format. A table format describes how to organize entire tables and their files at a system level - including how the files are stored, how changes to the table are tracked over time, and how the table's metadata is managed.

Iceberg tables are usually stored in cloud object storage like Amazon S3. This is an important feature because, with this approach, data is no longer gated behind Snowflake's proprietary storage layer. In other words, we can run queries against our data using Snowflake, Databricks, BigQuery, DuckDB, or whichever query engine we choose without first having a copy of the data in that warehouse.

Before setting up Snowflake external volumes with AWS, you'll need:

- Admin access to your Snowflake account.

- AWS IAM permissions to create roles and policies.

- An S3 bucket where your Iceberg tables will reside.

Setting Up

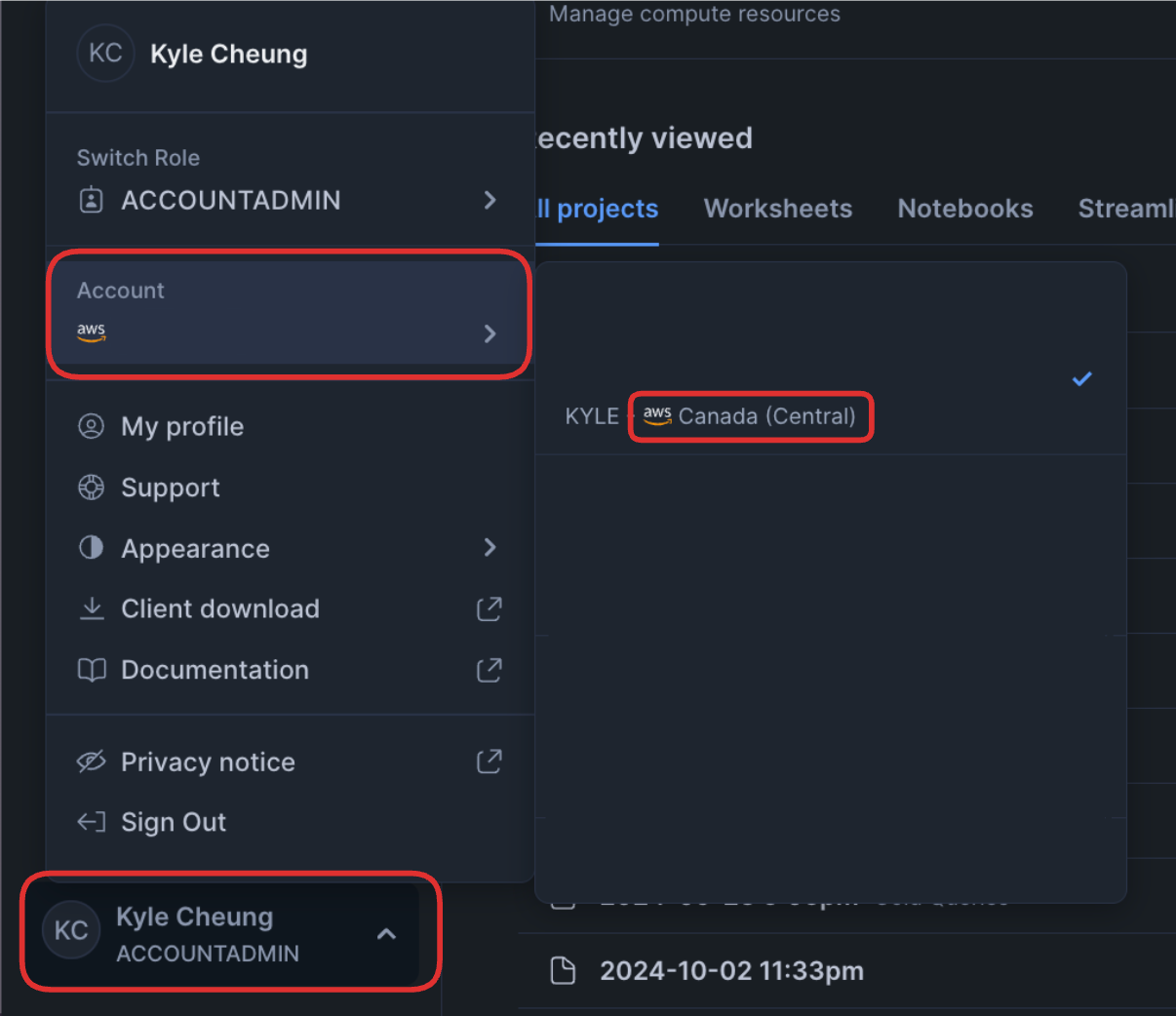

Make note of both the region and cloud provider that your Snowflake account uses, as you will be charged data transfer fees if data moves between different cloud regions or cloud providers. In our example, our Snowflake account is setup in ca-central-1

Amazon S3

In this guide, we're using Amazon S3, but you can also use Google Cloud Storage or Azure Blob Storage, though instructions may differ. Relevant Snowflake docs can be found here.

Creating a bucket

Login to AWS, navigate to S3 and ensure your region matches what you see in your Snowflake account. In our case we want ca-central-1.

If, for example, I had set my S3 bucket to another region such as us-east-1 , then I will be charged data transfer fees since the regions between my S3 bucket and my Snowflake account are different.

Navigating to S3.

Name your bucket, but do not use any dots in the name as Snowflake does not support bucket names containing dots (for example greybeam.demo.bucket).

We'll name ours greybeam-demo-bucket.

Creating a bucket.

Enabling Security Token Service

This step configures access permissions for Snowflake. First we need to ensure that the security token service (STS) is enabled for your Snowflake region. STS is used to create and provide trusted users with temporary security credentials that can control access to your AWS resources. In AWS:

- Navigate to IAM

- In the left panel select Account Settings

- Scroll down to STS

- Toggle your Snowflake region to Active

Activating security token service.

Creating an IAM Policy

In the same IAM page, select Policies on the left sidebar and create a policy. In the policy page select JSON and paste in the policy JSON below, replacing greybeam-demo-bucket with your bucket name.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "AllowPlatformIcebergBucket",

"Effect": "Allow",

"Action": "s3:*",

"Resource": [

"arn:aws:s3:::greybeam-demo-bucket",

"arn:aws:s3:::greybeam-demo-bucket/*"

]

}

]

}IAM policy for Snowflake.

Specifying "Action": "s3*" simply allows this policy to create, retrieve, and delete objects from the specified resource.

Creating an IAM policy.

Creating an IAM Role

Create a role and attach the policy from above to that role. This grants the role privileges to the S3 bucket we just created and will allow the role to read, write, and delete in the S3 bucket.

In the left sidebar:

- Select Roles.

- Create Role.

- Select AWS Account.

- Enable the option Require External ID, we'll enter

greybeam_iceberg_demo_external_id. Think of the external ID like a special password that Snowflake needs to access your S3 bucket - without it, Snowflake might accidentally grab data from the wrong customer's bucket, kind of like making sure your house key only opens your house and not your neighbor's. - In the Add Permissions page, we find the policy we just created

greybeam-demo-policy, and select the checkbox to the left. - Name your role and click create.

Creating an IAM role.

After you create your role, select it and copy the ARN in the right-side of the Summary pane, you'll need this for the Snowflake section below.

Getting the ARN.

Snowflake

Now that the hard part is done, all we need to is point Snowflake to our new S3 bucket with the correct IAM role and ID, setup the correct grants, and start playing around with Iceberg tables in Snowflake.

Make sure you're using the correct Snowflake roles in your organization to execute the code below.

Pointing Snowflake to S3

An external volume stores the IAM entity for your S3 bucket. Snowflake uses the IAM entity to securely connect to your storage for accessing table data, Iceberg metadata, and manifest files that store the table schema, partitions, and other metadata.

CREATE OR REPLACE EXTERNAL VOLUME greybeam_iceberg_demo_volume

STORAGE_LOCATIONS =

(

(

NAME = 'greybeam_demo_volume'

STORAGE_PROVIDER = 'S3'

STORAGE_BASE_URL = 's3://greybeam-demo-bucket/iceberg'

STORAGE_AWS_ROLE_ARN = 'arn:aws:iam::588738587852:role/greybeam_demo_role'

STORAGE_AWS_EXTERNAL_ID = 'greybeam_iceberg_demo_external_id'

)

);Let's breakdown this down:

STORAGE_BASE_URL = 's3://greybeam-demo-bucket/iceberg'think of this as the path to the folders where your Iceberg data is stored. We added a subfolder/icebergso that whenever we create a table using the volumegreybeam_iceberg_demo_volumeit will be created in the/icebergsubdirectory. There's a future where we might want to store parquet files in the same bucket but under a/parquetsubdirectory instead.STORAGE_AWS_ROLE_ARNthis is a value copied from the final step of the previous S3 instructions.STORAGE_AWS_EXTERNAL_IDthis is the external ID you entered into the IAM role creation.

Snowflake <> AWS Trust Policy

Finally, setup the trust policy between AWS and Snowflake. A trust policy is like a contract that tells AWS to let Snowflake (using its specific account number) access the role we defined, but only when the correct external ID is provided.

We need to retrieve the IAM user from Snowflake and set it in our IAM role in AWS. To retrieve the IAM user from Snowflake run the command below:

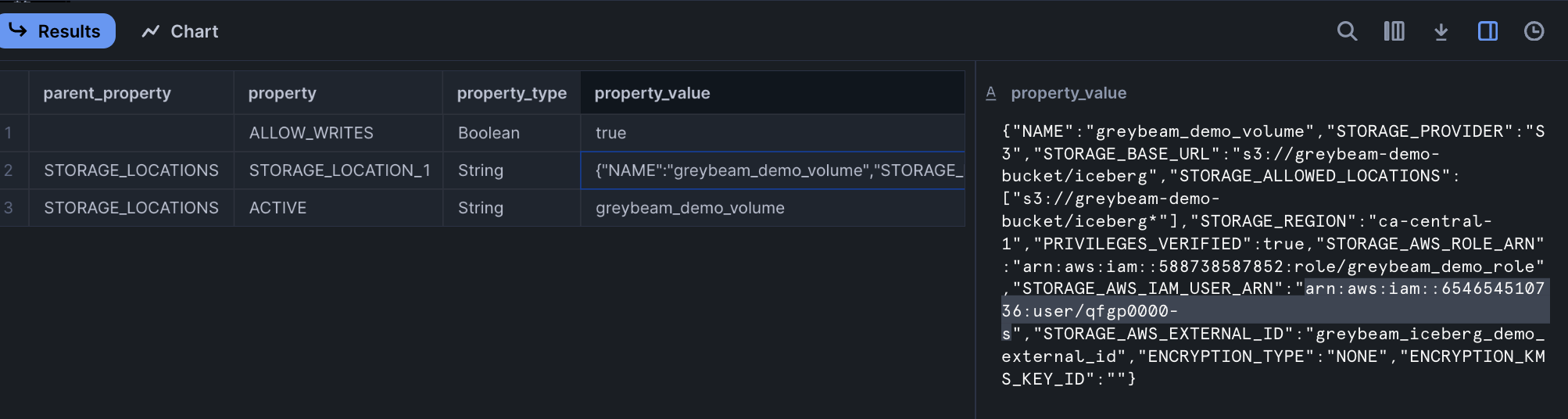

DESC EXTERNAL VOLUME greybeam_iceberg_demo_volume;You will need to record the value for the key STORAGE_AWS_IAM_USER_ARN; in our example it's, arn:aws:iam::654654510736:user/qfgp0000-s.

Input this value into the JSON in IAM roles -> Trust Relationships -> AWS.

Setting the Snowflake AWS Trust Policy

Grant Usage to the External Volume

In Snowflake, you may want specific roles to be able to create Iceberg tables in the S3 bucket we've defined. The command below will grant usage on that bucket to other roles.

GRANT USAGE ON EXTERNAL VOLUME greybeam_iceberg_demo_volume TO ROLE greybeam_role;Create Iceberg Tables

Now you can create Iceberg tables in Snowflake.

CREATE OR REPLACE ICEBERG TABLE line_item

BASE_LOCATION='line_item'

EXTERNAL_VOLUME=greybeam_iceberg_demo_volume

CATALOG=SNOWFLAKE

AS (

SELECT * FROM SNOWFLAKE_SAMPLE_DATA.TPCH_SF1.LINEITEM

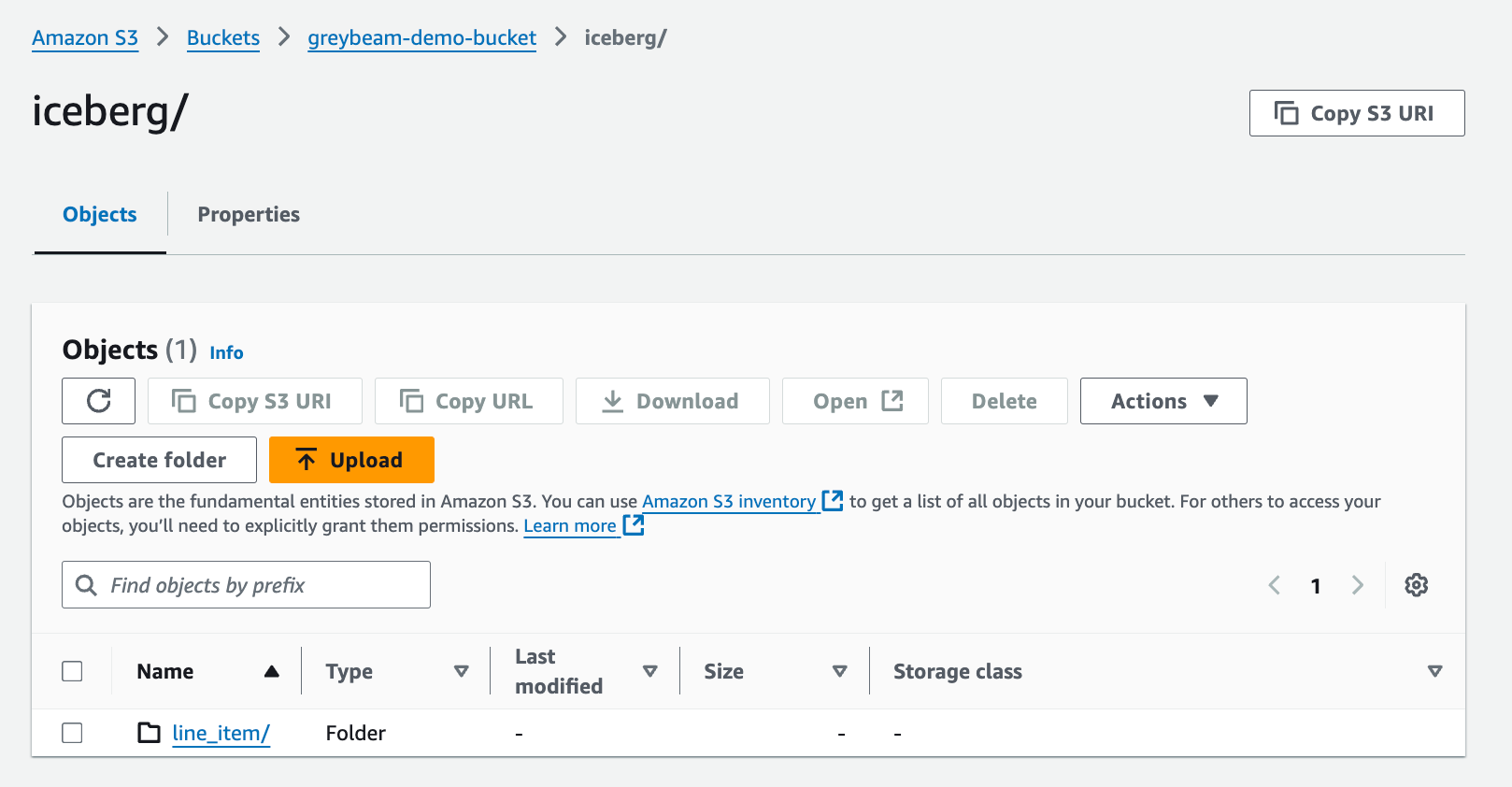

);Each Iceberg table will appear as a folder in the STORAGE_BASE_URL directory with the name specified in the BASE_LOCATION parameter of the create table statement.

Comments ()