Understanding Snowflake Compute Costs and Credit Consumption

When it comes to managing your Snowflake expenses, understanding how compute costs are calculated and optimized is crucial. In this comprehensive guide, we’ll break down the intricacies of Snowflake’s billing model and provide actionable insights on how you can optimize your usage to keep costs under control while maximizing performance.

The Basics: Snowflake Credits and Billing Model

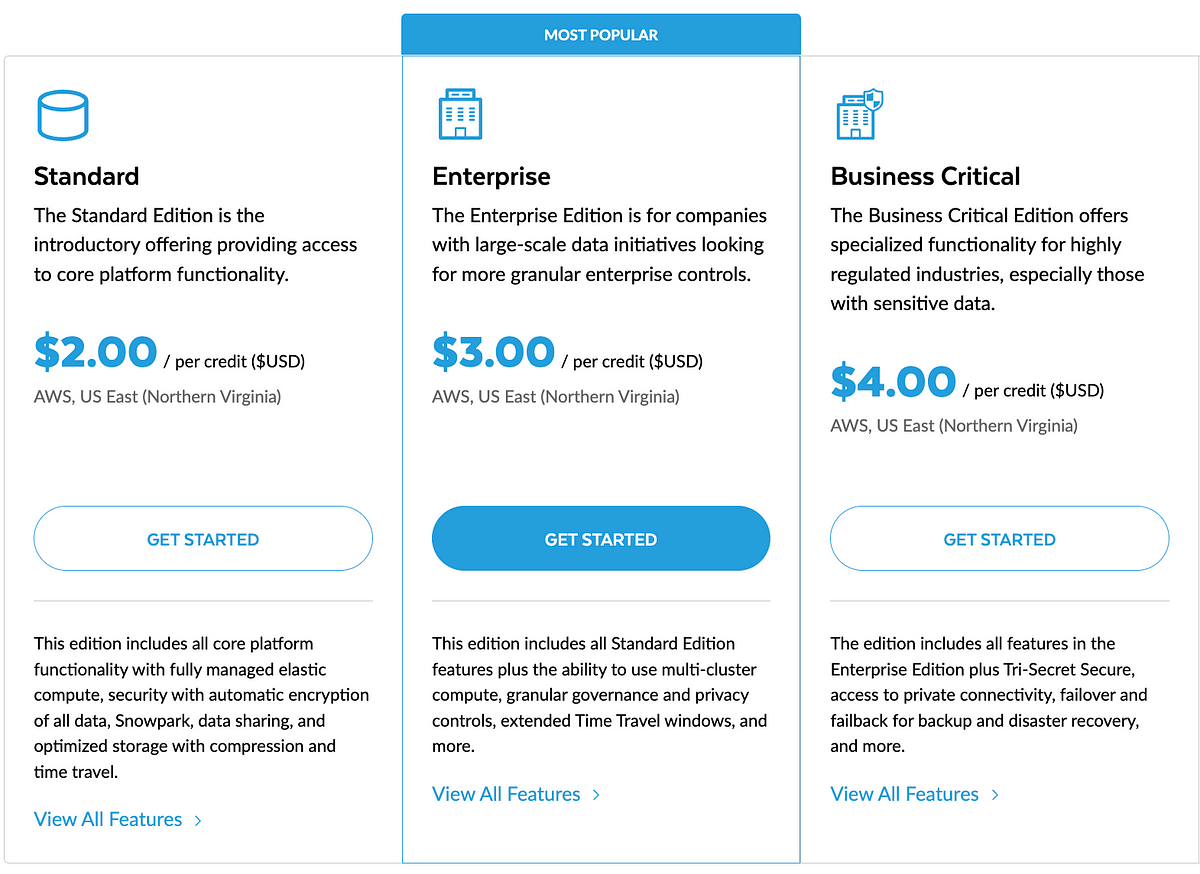

Snowflake’s billing model revolves around the concept of credits. A credit is the unit of measurement for compute resources consumed in Snowflake. The price of a credit depends on your Snowflake edition, cloud provider, region, and your specific agreement with Snowflake.

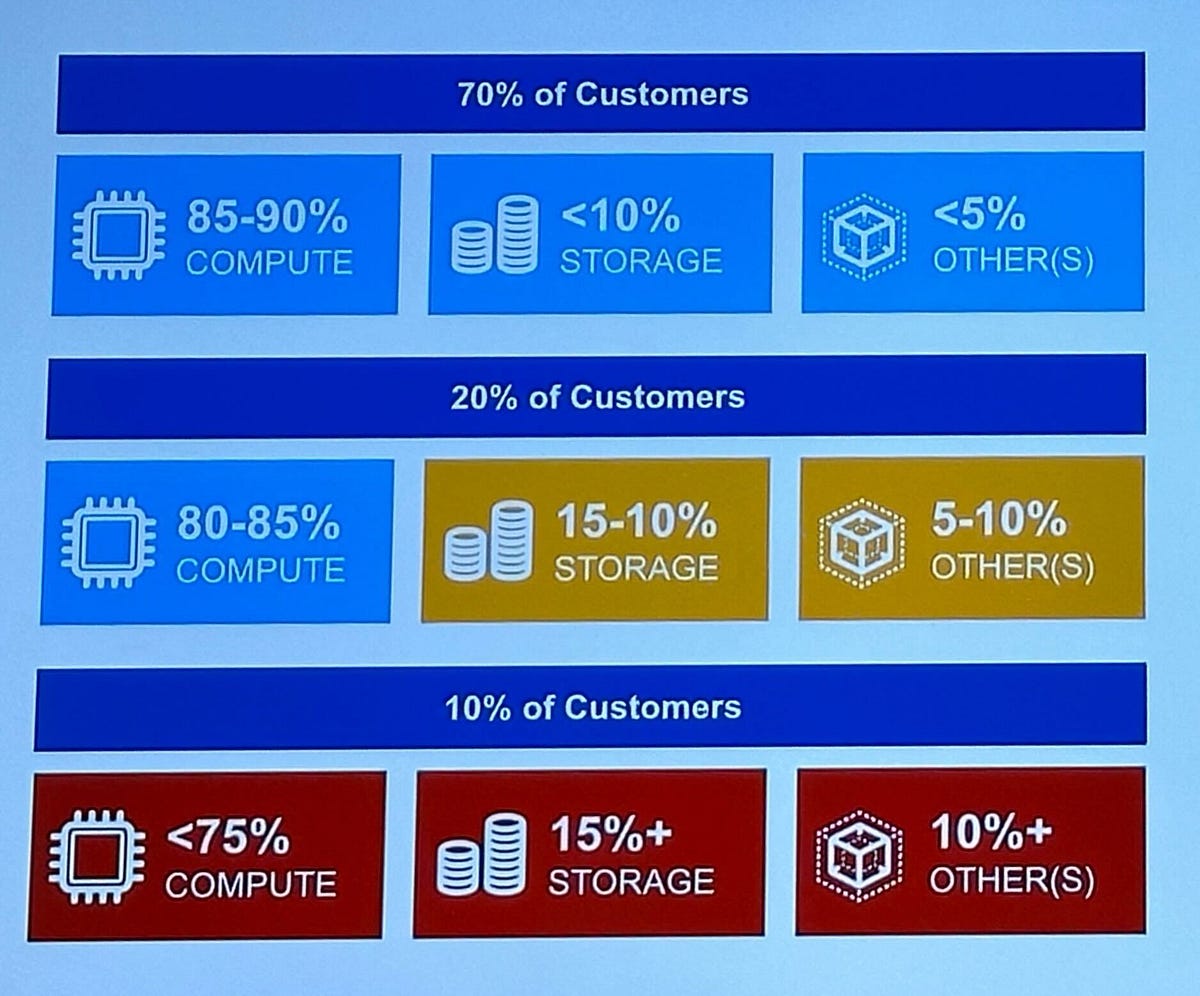

Understanding how credits are consumed is key to managing your expenses. Snowflake charges for three main components (and we’ll dive into each below):

- Compute (Virtual Warehouses)

- Storage

- Cloud Services

Additionally, you may incur charges for data transfer and serverless features.

How Virtual Warehouses Consume Credits and Scale Performance

The primary consumer of credits in Snowflake is the virtual warehouse. A virtual warehouse is a cluster of compute resources used to execute SQL queries, load data, and perform other data processing tasks.

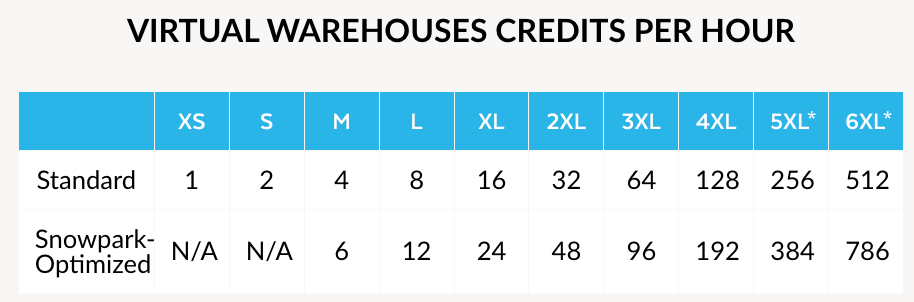

- Size Matters: Snowflake offers warehouse sizes from X-Small to 6X-Large. Each step up doubles both the compute resources and the credit consumption rate. For many workloads, performance scales roughly linearly with size, up to a point where the gains taper off. A query that takes 1 hour on an X-Small warehouse might take about 30 minutes on a Small and 15 minutes on a Medium. When that holds, the credit cost stays the same while the result arrives sooner. You’ll need to test cost against performance for your specific workloads.

- Time-Based Billing: Credits are billed per second, with a 60-second minimum each time a warehouse starts or resumes. If a warehouse resumes to run a single 10-second query and then suspends, you’ll still be billed for a full minute.

- Auto-suspend and Auto-resume: To minimize unnecessary credit consumption, Snowflake provides auto-suspend and auto-resume features. These automatically pause and restart warehouses based on activity.
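
To make the sizing and billing rules above concrete, here is a minimal Python sketch. It assumes standard warehouses, where X-Small consumes 1 credit per hour and each size up doubles that rate. The helper name `credits_for_run` is hypothetical and exists only for illustration.

```python
# Credits per hour, assuming standard warehouses: X-Small is 1, each size up doubles.
WAREHOUSE_SIZES = [
    "X-Small", "Small", "Medium", "Large", "X-Large",
    "2X-Large", "3X-Large", "4X-Large", "5X-Large", "6X-Large",
]
CREDITS_PER_HOUR = {size: 2 ** i for i, size in enumerate(WAREHOUSE_SIZES)}

MINIMUM_BILLED_SECONDS = 60  # applied each time a warehouse starts or resumes


def credits_for_run(size: str, running_seconds: int) -> float:
    """Credits billed for one continuous run of a warehouse (hypothetical helper)."""
    billed_seconds = max(running_seconds, MINIMUM_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


# With perfectly linear scaling, half the runtime at double the rate costs the same.
print(credits_for_run("X-Small", 3600))  # 1 hour on X-Small
print(credits_for_run("Small", 1800))    # 30 minutes on Small
print(credits_for_run("Medium", 10))     # 10 seconds of work, billed as 60 seconds
```

The first two calls return the same number of credits, which is why a larger warehouse is not automatically more expensive when the workload scales well.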

    -- Example: Creating a Medium-sized warehouse with auto-suspend and auto-resume
    CREATE WAREHOUSE ANALYTICS_WH
      WAREHOUSE_SIZE = 'MEDIUM'    -- Consumes 4 credits per hour
      AUTO_SUSPEND = 60            -- Warehouse will turn off after 60 seconds of inactivity
      AUTO_RESUME = TRUE           -- Will automatically restart when there's activity
      INITIALLY_SUSPENDED = TRUE;

Storage Costs

While compute often dominates Snowflake bills, storage costs shouldn’t be overlooked. Snowflake charges a monthly fee based on the average amount of data stored. To optimize storage costs:

- Leverage data compression

- Remove or archive unused data

- Use transient and temporary tables where appropriate
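
As a rough illustration of billing on average storage, the sketch below averages daily storage readings over a month and multiplies by a per-terabyte rate. The function name and the rate of 25.0 are placeholders, not Snowflake prices; your actual rate depends on your agreement.

```python
def monthly_storage_cost(daily_terabytes: list[float], price_per_tb_month: float) -> float:
    """Estimate one month's storage charge from daily readings (hypothetical helper)."""
    average_tb = sum(daily_terabytes) / len(daily_terabytes)
    return average_tb * price_per_tb_month


# 10 TB for the first half of a 30-day month, 20 TB for the second half.
readings = [10.0] * 15 + [20.0] * 15

# price_per_tb_month is a made-up rate used only for this example.
print(monthly_storage_cost(readings, price_per_tb_month=25.0))
```

Because the charge follows the average, data removed partway through the month lowers that month's bill in proportion to how early it was removed.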

Cloud Services and Serverless Features

The credit totals shown in Snowflake’s admin console can look slightly inflated because they include cloud services usage. Cloud services handle tasks like query optimization and metadata management. You are only billed for cloud services usage that exceeds 10% of your daily warehouse compute credits; usage up to that threshold is free.

Serverless features like Snowpipe, materialized view maintenance, and automatic clustering consume additional credits when used.
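
The daily 10% cloud services allowance can be expressed as a small calculation. This is a sketch of the rule as described above, with a hypothetical function name; the sample numbers are illustrative.

```python
FREE_CLOUD_SERVICES_RATIO = 0.10  # share of daily warehouse credits included at no charge


def billed_cloud_services(warehouse_credits: float, cloud_services_credits: float) -> float:
    """Cloud services credits actually billed for one day (hypothetical helper)."""
    allowance = warehouse_credits * FREE_CLOUD_SERVICES_RATIO
    return max(0.0, cloud_services_credits - allowance)


# A day with 100 warehouse credits has a 10-credit allowance.
print(billed_cloud_services(100, 8))   # under the allowance, nothing billed
print(billed_cloud_services(100, 12))  # only the portion above the allowance is billed
```

The adjustment is evaluated per day, so one heavy day of cloud services usage is not offset by lighter days elsewhere in the month.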

Optimizing Snowflake Costs

1. Right-size Your Warehouses

Use appropriately sized warehouses for your workloads. Bigger isn’t always better if it leads to idle resources. We’ll have an in-depth article on how to right-size your warehouses in the future so be sure to subscribe!

2. Leverage Auto-suspend and Auto-resume

Configure these features to automatically shut down idle warehouses and restart them when needed. A common rule of thumb is 60 seconds, but the right value depends on your use case. Keep in mind that when a warehouse suspends, it loses its local data cache, so the first queries after a resume may run slower while data is read from storage again.
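
To get a feel for what the auto-suspend setting costs, the sketch below estimates the credits spent idling before each suspend. It makes a simplifying assumption: every burst of activity is followed by one full idle window. The function name and the workload numbers are hypothetical.

```python
def idle_credits_per_day(credits_per_hour: float, auto_suspend_seconds: int, bursts_per_day: int) -> float:
    """Credits spent waiting for auto-suspend, one full idle window per burst (assumption)."""
    return credits_per_hour * auto_suspend_seconds * bursts_per_day / 3600


# A Medium warehouse (4 credits per hour) that wakes up 24 times a day.
print(idle_credits_per_day(4, auto_suspend_seconds=60, bursts_per_day=24))
print(idle_credits_per_day(4, auto_suspend_seconds=600, bursts_per_day=24))
```

A tenfold longer idle window costs ten times as much in this model, which is the saving to weigh against the slower first queries after each resume.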

    ALTER WAREHOUSE my_warehouse
      SET AUTO_SUSPEND = 60
          AUTO_RESUME = TRUE;

3. Implement Resource Monitors

Set up resource monitors to alert you or automatically suspend warehouses when credit consumption reaches certain thresholds.

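    CREATE RESOURCE MONITOR credit_limit_monitor
      WITH CREDIT_QUOTA = 1000
      TRIGGERS
        ON 75 PERCENT DO NOTIFY
        ON 90 PERCENT DO NOTIFY
        ON 100 PERCENT DO NOTIFY;

    -- A monitor only takes effect once it is assigned to the account or to a warehouse
    ALTER WAREHOUSE my_warehouse SET RESOURCE_MONITOR = credit_limit_monitor;

    -- We don't recommend suspend actions on any warehouses that run production jobs,
    -- as this may impact your pipelines!

4. Optimize Queries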

Well-written queries that leverage Snowflake’s architecture can significantly reduce compute time and credit consumption. Key strategies include:

- Use appropriate filtering to leverage partition pruning

- Avoid unnecessary JOINs

- Leverage materialized views for frequently-accessed data

We talk about how to use Snowflake’s query profile to optimize queries here.

5. Monitor and Analyze

Regularly review your credit consumption patterns using Snowflake’s built-in monitoring tools or third-party solutions. Use query tags to track and analyze usage by different teams or workloads.

    -- Query tag specific users
    ALTER USER kcheung SET QUERY_TAG = '{"team": "analytics", "user": "kcheung"}';

    -- Query tagging a specific session
    ALTER SESSION SET QUERY_TAG = 'MARKETING_DASHBOARD';

We’re launching our free Query Cost and Performance dashboard on the Snowflake marketplace in the next few weeks that will bring visibility into all things credit consumption.
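
Once queries carry tags, usage can be grouped by team. The Python sketch below assumes you have exported pairs of query tag and elapsed milliseconds, for example from the `QUERY_TAG` and `TOTAL_ELAPSED_TIME` columns of Snowflake's query history. The function name and sample rows are hypothetical, and tags that are not JSON objects are counted as "untagged".

```python
import json
from collections import defaultdict


def elapsed_seconds_by_team(rows):
    """Sum elapsed seconds per team from (query_tag, elapsed_ms) pairs."""
    totals = defaultdict(float)
    for query_tag, elapsed_ms in rows:
        try:
            tag = json.loads(query_tag)
        except (TypeError, ValueError):
            tag = None  # missing tag or a plain-string tag
        team = tag.get("team", "untagged") if isinstance(tag, dict) else "untagged"
        totals[team] += elapsed_ms / 1000
    return dict(totals)


# Made-up rows for illustration only.
sample_rows = [
    ('{"team": "analytics", "user": "kcheung"}', 12_000),
    ('{"team": "analytics", "user": "kcheung"}', 3_000),
    ("MARKETING_DASHBOARD", 5_000),
]
print(elapsed_seconds_by_team(sample_rows))
```

Using JSON tags consistently, rather than mixing them with free-form strings, keeps this kind of grouping simple.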

6. Optimize Data Storage

- Cluster large tables on frequently filtered columns

- Use the Search Optimization Service for large tables with point lookup queries

- Consider using materialized views for complex, frequently-run queries

Conclusion

Optimizing Snowflake costs requires a holistic approach, considering compute, storage, and data transfer. By right-sizing warehouses, leveraging auto-suspend features, optimizing queries, and continuously monitoring usage, you can ensure you’re getting the most value out of your Snowflake investment while keeping costs under control.

Remember, it’s not just about cutting costs; it’s about optimizing for both performance and efficiency. Regular review and adjustment of your Snowflake usage will help you maintain this balance as your data needs evolve.

Is Snowflake Expensive?

All usage-based cloud platforms can get expensive when not used carefully. There are a ton of controls teams can fiddle with to get a handle on their Snowflake costs. At Greybeam, we’ve built a query performance and observability platform that automagically optimizes SQL queries sent to Snowflake, saving you thousands in compute costs. Reach out to k@greybeam.ai to learn more about how we can optimize your Snowflake environment.
